| Revision as of 21:38, 13 September 2020 editMiaumee (talk | contribs)Extended confirmed users765 edits + "," to break verbose phrases. Minor C/E fixes. + inline refs. - dup empty lines. "occurrencies" -> "occurrences". Typeset inline math properly. Lc "categorical distribution". + cm on geometric distribution.Tags: Reverted Visual edit← Previous edit | Revision as of 15:33, 21 September 2020 edit undoJayBeeEll (talk | contribs)Extended confirmed users, New page reviewers28,266 edits Reverted 1 edit by Miaumee (talk): Per User talk:Miaumee, this is apparently the preferred response to poor editingTags: Twinkle UndoNext edit → | ||

| Line 1: | Line 1: | ||

| {{Short description|Discrete-variable probability distribution}} | {{Short description|Discrete-variable probability distribution}} | ||

| ] | ] | ||

| In ] and ], a '''probability mass function''' ('''PMF''') |

In ] and ], a '''probability mass function''' ('''PMF''') is a function that gives the probability that a ] is exactly equal to some value.<ref>{{cite book|author=Stewart, William J.|title=Probability, Markov Chains, Queues, and Simulation: The Mathematical Basis of Performance Modeling|publisher=Princeton University Press|year=2011|isbn=978-1-4008-3281-1|page=105|url=https://books.google.com/books?id=ZfRyBS1WbAQC&pg=PT105}}</ref> Sometimes it is also known as the discrete density function. The probability mass function is often the primary means of defining a ], and such functions exist for either ] or ]s whose ] is discrete. | ||

| A probability mass function differs from a ] (PDF) in that the latter is associated with continuous rather than discrete random variables. A PDF must be ] over an interval to yield a probability.<ref name=":0">{{Cite book|title=A modern introduction to probability and statistics : understanding why and how|date=2005|publisher=Springer|others=Dekking, Michel, 1946-|isbn=978-1-85233-896-1|location=London|oclc=262680588}}</ref> | A probability mass function differs from a ] (PDF) in that the latter is associated with continuous rather than discrete random variables. A PDF must be ] over an interval to yield a probability.<ref name=":0">{{Cite book|title=A modern introduction to probability and statistics : understanding why and how|date=2005|publisher=Springer|others=Dekking, Michel, 1946-|isbn=978-1-85233-896-1|location=London|oclc=262680588}}</ref> | ||

| The value of the random variable |

The value of the random variable having the largest probability mass is called the ]. | ||

| ==Formal definition== | ==Formal definition== | ||

| Probability mass function is the probability distribution of a discrete random variable, and provides the possible values and their associated probabilities. It is the function <math>p:\mathbb{\R}</math> <math>\rightarrow </math> defined by |

Probability mass function is the probability distribution of a discrete random variable, and provides the possible values and their associated probabilities. It is the function <math>p:\mathbb{\R}</math> <math>\rightarrow </math> defined by | ||

| {{Equation box 1 | {{Equation box 1 | ||

| |indent = | |indent = | ||

| Line 20: | Line 20: | ||

| |background colour=#F5FFFA}} | |background colour=#F5FFFA}} | ||

| for <math>-\infin < x < \infin</math>,<ref name=":0" /> where <math>P</math> is a ]. <math>p_X(x)</math> can also be simplified as <math>p(x)</math>.<ref>{{Cite book|title=Engineering optimization : theory and practice|last=Rao, Singiresu S., 1944-|date=1996|publisher=Wiley|isbn=0-471-55034-5|edition=3rd|location=New York|oclc=62080932}}</ref |

for <math>-\infin < x < \infin</math>,<ref name=":0" /> where <math>P</math> is a ]. <math>p_X(x)</math> can also be simplified as <math>p(x)</math>.<ref>{{Cite book|title=Engineering optimization : theory and practice|last=Rao, Singiresu S., 1944-|date=1996|publisher=Wiley|isbn=0-471-55034-5|edition=3rd|location=New York|oclc=62080932}}</ref> | ||

| The probabilities associated with each possible values must be positive and sum up to 1. For all other values, the probabilities need to be 0. | The probabilities associated with each possible values must be positive and sum up to 1. For all other values, the probabilities need to be 0. | ||

| Line 28: | Line 28: | ||

| :<math>p(x) = 0</math> for all other x | :<math>p(x) = 0</math> for all other x | ||

| Thinking of probability as mass |

Thinking of probability as mass helps to avoid mistakes since the physical mass is conserved as is the total probability for all hypothetical outcomes <math>x</math>. | ||

| ==Measure theoretic formulation== | ==Measure theoretic formulation== | ||

| Line 36: | Line 36: | ||

| Suppose that <math>(A, \mathcal A, P)</math> is a ] | Suppose that <math>(A, \mathcal A, P)</math> is a ] | ||

| and that <math>(B, \mathcal B)</math> is a measurable space whose underlying ] is discrete, so in particular contains singleton sets of <math>B</math>. In this setting, a random variable <math> X \colon A \to B</math> is discrete |

and that <math>(B, \mathcal B)</math> is a measurable space whose underlying ] is discrete, so in particular contains singleton sets of <math>B</math>. In this setting, a random variable <math> X \colon A \to B</math> is discrete provided its image is countable. | ||

| The ] <math>X_{*}(P)</math>—called a distribution of <math>X</math> in this context—is a probability measure on <math>B</math> whose restriction to singleton sets induces a probability mass function <math>f_X \colon B \to \mathbb R</math> |

The ] <math>X_{*}(P)</math>—called a distribution of <math>X</math> in this context—is a probability measure on <math>B</math> whose restriction to singleton sets induces a probability mass function <math>f_X \colon B \to \mathbb R</math> since <math>f_X(b)=P(X^{-1}(b))=(\{b\})</math> for each <math>b \in B</math>. | ||

| Now |

Now suppose that <math>(B, \mathcal B, \mu)</math> is a ] equipped with the counting measure μ. The probability density function <math>f</math> of <math>X</math> with respect to the counting measure, if it exists, is the ] of the pushforward measure of <math>X</math> (with respect to the counting measure), so <math> f = d X_*P / d \mu</math> and <math>f</math> is a function from <math>B</math> to the non-negative reals. As a consequence, for any <math>b \in B</math> we have | ||

| :<math>P(X=b)=P(X^{-1}( \{ b \} )) := \int_{X^{-1}(\{b \})} dP =</math><math>\int_{ \{b \}} f d \mu = f(b),</math> | :<math>P(X=b)=P(X^{-1}( \{ b \} )) := \int_{X^{-1}(\{b \})} dP =</math><math>\int_{ \{b \}} f d \mu = f(b),</math> | ||

| demonstrating that <math>f</math> is in fact a probability mass function. | demonstrating that <math>f</math> is in fact a probability mass function. | ||

| ⚫ | When there is a natural order among the potential outcomes <math>x</math>, it may be convenient to assign numerical values to them (or ''n''-tuples in case of a discrete ]) |

||

| ⚫ | When there is a natural order among the potential outcomes <math>x</math>, it may be convenient to assign numerical values to them (or ''n''-tuples in case of a discrete ]) and to consider also values not in the ] of <math>X</math>. That is, <math>f_X</math> may be defined for all ]s and <math>f_X(x)=0</math> for all <math>x \notin X(S)</math> as shown in the figure. | ||

| The image of <math>X</math> has a ] subset on which the probability mass function <math>f_X(x)</math> is one. Consequently, the probability mass function is zero for all but a countable number of values of <math>x</math>. | The image of <math>X</math> has a ] subset on which the probability mass function <math>f_X(x)</math> is one. Consequently, the probability mass function is zero for all but a countable number of values of <math>x</math>. | ||

| The discontinuity of probability mass functions is related to the fact that the ] of a discrete random variable is also discontinuous. If <math>X</math> is a discrete random variable, then <math> P(X = x) = 1</math> means that the casual event <math>(X = x)</math> is certain (it is true in the 100% of the |

The discontinuity of probability mass functions is related to the fact that the ] of a discrete random variable is also discontinuous. If <math>X</math> is a discrete random variable, then <math> P(X = x) = 1</math> means that the casual event <math>(X = x)</math> is certain (it is true in the 100% of the occurrencies); on the contrary, <math>P(X = x) = 0</math> means that the casual event <math>(X = x)</math> is always impossible. This statement isn't true for a ] <math>X</math>, for which <math>P(X = x) = 0</math> for any possible <math>x</math>: in fact, by definition, a continuous random variable can have an ] of possible values and thus the probability it has a single particular value ''x'' is equal to <math>\frac{1}{\infty} = 0</math>. ] is the process of converting a continuous random variable into a discrete one. | ||

| ==Examples== | ==Examples== | ||

| Line 54: | Line 56: | ||

| ===Finite=== | ===Finite=== | ||

| There are three major distributions associated, the ], the ] and the ]. | |||

| *], Ber(p), |

*], Ber(p), is used to model an experiment with only two possible outcomes. The two outcomes are often encoded as 1 and 0. | ||

| :<math>p_X(x) = \begin{cases} p, & \text{if }x\text{ is 1} \\ 1-p, & \text{if }x\text{ is 0} \end{cases}</math> | :<math>p_X(x) = \begin{cases} p, & \text{if }x\text{ is 1} \\ 1-p, & \text{if }x\text{ is 0} \end{cases}</math> | ||

| Line 62: | Line 64: | ||

| ::<math>p_X(x) = \begin{cases}\frac{1}{2}, &x \in \{0, 1\},\\0, &x \notin \{0, 1\}.\end{cases}</math> | ::<math>p_X(x) = \begin{cases}\frac{1}{2}, &x \in \{0, 1\},\\0, &x \notin \{0, 1\}.\end{cases}</math> | ||

| *], Bin(n,p), |

*], Bin(n,p), models the number of successes when someone draws n times with replacement. Each draw or experiment is independent, with two possible outcomes. The associated probability mass function is<math>\binom{n}{k}p^k (1-p)^{n-k}</math>. ]. All the numbers on the {{dice}} have an equal chance of appearing on top when the die stops rolling.]] | ||

| :An example of the Binomial distribution is the probability of getting exactly one 6 |

:An example of the Binomial distribution is the probability of getting exactly one 6 when someone rolls a fair die three times. | ||

| * |

*Geometric distribution describes the number of trials needed to get one success, denoted as Geo(p). Its probability mass function is <math>p_X(k) = (1-p)^{k-1} p</math>. | ||

| :An example is tossing the coin until the first head appears. | :An example is tossing the coin until the first head appears. | ||

| :: | |||

| Other distributions that can be modeled using a probability mass function are the ] (also known as the generalized Bernoulli distribution) |

Other distributions that can be modeled using a probability mass function are the ] (also known as the generalized Bernoulli distribution) and the ]. | ||

| * If the discrete distribution has two or more categories |

* If the discrete distribution has two or more categories one of which may occur, whether or not these categories have a natural ordering, when there is only a single trial (draw) this is a categorical distribution. | ||

| * An example of a ], and of its probability mass function, is provided by the ]. Here |

* An example of a ], and of its probability mass function, is provided by the ]. Here the multiple random variables are the numbers of successes in each of the categories after a given number of trials, and each non-zero probability mass gives the probability of a certain combination of numbers of successes in the various categories. | ||

| ===Infinite |

===Infinite=== | ||

| *The following exponentially declining distribution is an example of a distribution with an infinite number of possible outcomes—all the positive integers: | *The following exponentially declining distribution is an example of a distribution with an infinite number of possible outcomes—all the positive integers: | ||

| Line 88: | Line 91: | ||

| {{Main|Joint probability distribution}} | {{Main|Joint probability distribution}} | ||

| Two or more discrete random variables have a joint probability mass function, which gives the probability of each possible combination of realizations for the random variables. |

Two or more discrete random variables have a joint probability mass function, which gives the probability of each possible combination of realizations for the random variables. | ||

| ==References== | ==References== | ||

Revision as of 15:33, 21 September 2020

Discrete-variable probability distribution

In probability and statistics, a probability mass function (PMF) is a function that gives the probability that a discrete random variable is exactly equal to some value. Sometimes it is also known as the discrete density function. The probability mass function is often the primary means of defining a discrete probability distribution, and such functions exist for either scalar or multivariate random variables whose domain is discrete.

A probability mass function differs from a probability density function (PDF) in that the latter is associated with continuous rather than discrete random variables. A PDF must be integrated over an interval to yield a probability.

The value of the random variable having the largest probability mass is called the mode.
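As a small illustration of the mode, the sketch below stores a PMF as a Python dictionary and picks the value with the largest mass. The loaded four-sided die and the helper name `mode` are hypothetical, chosen only for this example.

```python
from fractions import Fraction

# Hypothetical PMF of a loaded four-sided die, stored as value -> probability.
loaded_die = {
    1: Fraction(1, 10),
    2: Fraction(2, 10),
    3: Fraction(4, 10),
    4: Fraction(3, 10),
}


def mode(pmf):
    """Return a value whose probability mass is largest."""
    # max() returns the first maximal key, so ties are broken by insertion order.
    return max(pmf, key=pmf.get)


print(mode(loaded_die))  # value 3 carries the largest mass, 4/10
```

If several values share the largest mass, this sketch reports only the first of them.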

Formal definition

The probability mass function is the probability distribution of a discrete random variable; it gives the possible values and their associated probabilities. It is the function $p\colon \mathbb{R} \to [0,1]$ defined by

$$p_X(x) = P(X = x)$$

for $-\infty < x < \infty$, where $P$ is a probability measure. $p_X(x)$ can also be written more simply as $p(x)$.

The probabilities associated with the possible values $x_i$ must be positive and sum to 1. For all other values, the probability must be 0:

- $\sum_i p_X(x_i) = 1$
- $p_X(x_i) > 0$
- $p(x) = 0$ for all other $x$

Thinking of probability as mass helps to avoid mistakes, since physical mass is conserved, as is the total probability for all hypothetical outcomes $x$.
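The conditions on a PMF (positive mass on the possible values, zero elsewhere, total mass 1) can be checked mechanically. The following is a minimal sketch that assumes a PMF with finitely many possible values stored as a dictionary; the names `is_valid_pmf`, `p` and `fair_die` are illustrative.

```python
from fractions import Fraction


def is_valid_pmf(pmf):
    """Check that every listed value has positive mass and the masses sum to 1."""
    all_positive = all(mass > 0 for mass in pmf.values())
    sums_to_one = sum(pmf.values()) == 1
    return all_positive and sums_to_one


def p(pmf, x):
    """Evaluate the PMF at x; values that are not listed have probability 0."""
    return pmf.get(x, Fraction(0))


# Fair six-sided die: each face has mass 1/6.
fair_die = {face: Fraction(1, 6) for face in range(1, 7)}

print(is_valid_pmf(fair_die))  # True
print(p(fair_die, 7))          # 0, since 7 is not a possible value
```

Exact fractions are used instead of floats so that the "sums to 1" check is not affected by rounding.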

Measure theoretic formulation

A probability mass function of a discrete random variable $X$ can be seen as a special case of two more general measure theoretic constructions: the distribution of $X$ and the probability density function of $X$ with respect to the counting measure. We make this more precise below.

Suppose that $(A, \mathcal{A}, P)$ is a probability space and that $(B, \mathcal{B})$ is a measurable space whose underlying σ-algebra is discrete, so that in particular it contains the singleton sets of $B$. In this setting, a random variable $X \colon A \to B$ is discrete provided its image is countable. The pushforward measure $X_{*}(P)$, called the distribution of $X$ in this context, is a probability measure on $B$ whose restriction to singleton sets induces a probability mass function $f_X \colon B \to \mathbb{R}$, since $f_X(b) = P(X^{-1}(b)) = [X_{*}(P)](\{b\})$ for each $b \in B$.

Now suppose that $(B, \mathcal{B}, \mu)$ is a measure space equipped with the counting measure $\mu$. The probability density function $f$ of $X$ with respect to the counting measure, if it exists, is the Radon–Nikodym derivative of the pushforward measure of $X$ (with respect to the counting measure), so $f = dX_{*}P / d\mu$ and $f$ is a function from $B$ to the non-negative reals. As a consequence, for any $b \in B$ we have

$$P(X = b) = P(X^{-1}(\{b\})) := \int_{X^{-1}(\{b\})} dP = \int_{\{b\}} f \, d\mu = f(b),$$

demonstrating that $f$ is in fact a probability mass function.
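For a finite sample space, the pushforward construction reduces to summing the probability of every outcome in the preimage of each value. The sketch below assumes a hypothetical experiment (two fair coin tosses, with the random variable counting heads); the names `sample_space`, `X` and `pushforward_pmf` are illustrative.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product

# Sample space: all outcomes of two fair coin tosses, with the uniform measure P.
sample_space = list(product("HT", repeat=2))
P = {outcome: Fraction(1, len(sample_space)) for outcome in sample_space}


def X(outcome):
    """Random variable mapping an outcome to its number of heads."""
    return outcome.count("H")


def pushforward_pmf(P, X):
    """Compute f_X(b) = P(X^{-1}({b})) by summing P over each preimage."""
    f = defaultdict(Fraction)  # Fraction() is 0, so missing keys start at zero
    for outcome, prob in P.items():
        f[X(outcome)] += prob
    return dict(f)


f_X = pushforward_pmf(P, X)
print(f_X[0], f_X[1], f_X[2])  # 1/4 1/2 1/4
```

Here the mass at 1 is 1/2 because two outcomes, heads-tails and tails-heads, both map to the value 1.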

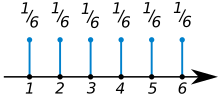

When there is a natural order among the potential outcomes $x$, it may be convenient to assign numerical values to them (or $n$-tuples in the case of a discrete multivariate random variable) and to consider also values not in the image of $X$. That is, $f_X$ may be defined for all real numbers, with $f_X(x) = 0$ for all $x \notin X(S)$, as shown in the figure.

The image of $X$ has a countable subset on which the values of the probability mass function $f_X(x)$ sum to one. Consequently, the probability mass function is zero for all but a countable number of values of $x$.

The discontinuity of probability mass functions is related to the fact that the cumulative distribution function of a discrete random variable is also discontinuous. If $X$ is a discrete random variable, then $P(X = x) = 1$ means that the event $(X = x)$ is certain (it is true in 100% of the occurrences); conversely, $P(X = x) = 0$ means that the event $(X = x)$ is always impossible. This statement is not true for a continuous random variable $X$, for which $P(X = x) = 0$ for any possible $x$: a continuous random variable takes values in an uncountably infinite set, and the probability that it takes any single particular value $x$ is zero (informally, $\frac{1}{\infty} = 0$). Discretization is the process of converting a continuous random variable into a discrete one.
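The link between the PMF and the jumps of the cumulative distribution function can be seen numerically. The sketch below assumes a fair six-sided die and a hypothetical helper `cdf`; it compares the CDF just below a possible value with the CDF at that value.

```python
from fractions import Fraction

# Fair six-sided die: each face has mass 1/6.
fair_die = {face: Fraction(1, 6) for face in range(1, 7)}


def cdf(pmf, x):
    """F(x) = P(X <= x): total mass at all values not exceeding x."""
    return sum((mass for value, mass in pmf.items() if value <= x), Fraction(0))


just_below = cdf(fair_die, Fraction(299, 100))  # x = 2.99, so F = 1/3
at_three = cdf(fair_die, 3)                     # x = 3, so F = 1/2
jump = at_three - just_below

print(jump == fair_die[3])  # True: the jump at 3 equals the mass at 3
```

Between possible values the CDF stays flat, so every discontinuity sits at a value with positive mass.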

Examples

Main articles: Bernoulli distribution, Binomial distribution, and Geometric distribution

Finite

Three major distributions are described by probability mass functions: the Bernoulli distribution, the binomial distribution, and the geometric distribution.

- Bernoulli distribution, Ber(p), is used to model an experiment with only two possible outcomes. The two outcomes are often encoded as 1 and 0.

  $$p_X(x) = \begin{cases} p, & \text{if } x \text{ is 1} \\ 1-p, & \text{if } x \text{ is 0} \end{cases}$$

- An example of the Bernoulli distribution is tossing a coin. Suppose that $S$ is the sample space of all outcomes of a single toss of a fair coin, and $X$ is the random variable defined on $S$ assigning 0 to the category "tails" and 1 to the category "heads". Since the coin is fair, the probability mass function is

  $$p_X(x) = \begin{cases}\frac{1}{2}, & x \in \{0, 1\},\\ 0, & x \notin \{0, 1\}.\end{cases}$$

- Binomial distribution, Bin(n,p), models the number of successes when someone draws $n$ times with replacement. Each draw or experiment is independent, with two possible outcomes. The associated probability mass function is $\binom{n}{k} p^k (1-p)^{n-k}$.

The probability mass function of a fair die. All the numbers on the die have an equal chance of appearing on top when the die stops rolling.

- An example of the Binomial distribution is the probability of getting exactly one 6 when someone rolls a fair die three times.

- Geometric distribution, denoted Geo(p), describes the number of trials needed to get one success. Its probability mass function is $p_X(k) = (1-p)^{k-1} p$.

- An example is tossing a coin until the first head appears.
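The three probability mass functions listed above can be evaluated directly. The sketch below assumes integer arguments and exact `Fraction` probabilities; the function names are illustrative. It works through the two examples mentioned: exactly one 6 in three rolls of a fair die, and the first head of a fair coin appearing on a given toss.

```python
from fractions import Fraction
from math import comb


def bernoulli_pmf(x, p):
    """Ber(p): mass p at 1, mass 1 - p at 0, and 0 elsewhere."""
    if x == 1:
        return p
    if x == 0:
        return 1 - p
    return 0


def binomial_pmf(k, n, p):
    """Bin(n, p): probability of exactly k successes in n independent trials."""
    if not 0 <= k <= n:
        return 0
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def geometric_pmf(k, p):
    """Geo(p): probability that the first success occurs on trial k (k >= 1)."""
    if k < 1:
        return 0
    return (1 - p) ** (k - 1) * p


# Exactly one 6 in three rolls of a fair die: 3 * (1/6) * (5/6)^2.
one_six_in_three_rolls = binomial_pmf(1, 3, Fraction(1, 6))
print(one_six_in_three_rolls)  # 25/72

# First head of a fair coin on the third toss: (1/2)^2 * (1/2).
first_head_on_third_toss = geometric_pmf(3, Fraction(1, 2))
print(first_head_on_third_toss)  # 1/8
```

Summing `binomial_pmf(k, 3, Fraction(1, 6))` over `k = 0, 1, 2, 3` gives exactly 1, as the unit total probability requirement demands.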

Other distributions that can be modeled using a probability mass function are the categorical distribution (also known as the generalized Bernoulli distribution) and the multinomial distribution.

- If a discrete distribution has two or more categories, exactly one of which occurs in a single trial (draw), then it is a categorical distribution, whether or not the categories have a natural ordering.

- An example of a multivariate discrete distribution, and of its probability mass function, is provided by the multinomial distribution. Here the multiple random variables are the numbers of successes in each of the categories after a given number of trials, and each non-zero probability mass gives the probability of a certain combination of numbers of successes in the various categories.

Infinite

- The following exponentially declining distribution is an example of a distribution with an infinite number of possible outcomes, namely all the positive integers:

  $$p(i) = \frac{1}{2^i} \qquad \text{for } i = 1, 2, 3, \dots$$

- Despite the infinite number of possible outcomes, the total probability mass is 1/2 + 1/4 + 1/8 + ... = 1, satisfying the unit total probability requirement for a probability distribution.
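The convergence of the total mass to 1 can be checked with exact partial sums. This sketch assumes the distribution assigns mass $1/2^i$ to each positive integer $i$, which matches the series 1/2 + 1/4 + 1/8 + ... quoted above; the helper names are illustrative.

```python
from fractions import Fraction


def p(i):
    """Mass 1/2^i at each positive integer i, and 0 elsewhere."""
    return Fraction(1, 2**i) if i >= 1 else Fraction(0)


def partial_sum(n):
    """Total mass on the first n positive integers."""
    return sum((p(i) for i in range(1, n + 1)), Fraction(0))


for n in (1, 2, 3, 10):
    # Each partial sum falls short of 1 by exactly 1/2^n.
    print(n, partial_sum(n), 1 - partial_sum(n))
```

The shortfall $1/2^n$ halves with every additional term, so the partial sums approach 1 without ever exceeding it.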

Multivariate case

Main article: Joint probability distribution

Two or more discrete random variables have a joint probability mass function, which gives the probability of each possible combination of realizations for the random variables.
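A joint probability mass function can be stored as a table keyed by tuples of realizations. The sketch below assumes a hypothetical pair of variables built from two fair coin tosses (the first toss encoded as 0 or 1, and the total number of heads). The `marginal` helper, which sums out the other variable, is an illustration that goes slightly beyond the text above.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product

# Joint PMF of (X, Y) for two fair coin tosses, where X is the first toss
# (1 for heads, 0 for tails) and Y is the total number of heads.
joint = defaultdict(Fraction)
for first, second in product((0, 1), repeat=2):
    joint[(first, first + second)] += Fraction(1, 4)
joint = dict(joint)


def marginal(joint_pmf, axis):
    """Sum the joint PMF over all coordinates except the one at index axis."""
    m = defaultdict(Fraction)
    for realization, prob in joint_pmf.items():
        m[realization[axis]] += prob
    return dict(m)


print(joint[(1, 2)])       # 1/4: first toss is heads and both tosses are heads
print(marginal(joint, 1))  # PMF of Y alone, with masses 1/4, 1/2 and 1/4
```

Combinations that cannot occur, such as a first toss of tails together with two heads, are simply absent from the table and have probability 0.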

References

- Stewart, William J. (2011). Probability, Markov Chains, Queues, and Simulation: The Mathematical Basis of Performance Modeling. Princeton University Press. p. 105. ISBN 978-1-4008-3281-1.

- Dekking, Michel (2005). A Modern Introduction to Probability and Statistics: Understanding Why and How. London: Springer. ISBN 978-1-85233-896-1. OCLC 262680588.

- Rao, Singiresu S. (1996). Engineering Optimization: Theory and Practice (3rd ed.). New York: Wiley. ISBN 0-471-55034-5. OCLC 62080932.

Further reading

- Johnson, N. L.; Kotz, S.; Kemp, A. (1993). Univariate Discrete Distributions (2nd ed.). Wiley. p. 36. ISBN 0-471-54897-9.
